Last week, Stephen Fry called Zuckerberg and Musk the "worst polluters in human history", but he wasn't talking about the environment.

The self-professed technophile, who once so joyfully quarried the glittering bounty of social media, has turned canary, warning directly and urgently of the stench he now detects in the bowels of Earth's digital coal mine: a reek of effluvia in the "air and waters" of our global culture.

The long arc of Fry's journey from advocate to alarmist is important. The flash-bang of today's "AI ethics" panic has distracted our moral attention from the duller truth that malignant "ethical misalignment" has already metastasised into every major organ of our digital lives.

*

In 2015, Google's corporate restructure quietly replaced the famous "Don't be evil" motto with a saccharine, MBA-approved facsimile. It seems the motto had become a millstone, as it allowed critics to attack not just the effects, but also the morality, of mass surveillance, tax avoidance and anti-competitive behaviour. Facing the question of why a company with the motto "Don't be evil" was committing highly profitable, but potentially evil, acts, Google ditched the motto. The acts continued. (More on "Don't be evil" later.)

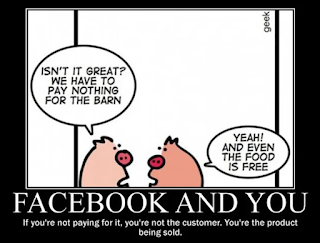

In 2018, reporting revealed that Facebook's custom-built data-harvesting "Facebook Platform" had been used by Cambridge Analytica to... harvest data. The fallout wiped $119 billion off Facebook's market value and eventually cost $725 million in compensation payouts. Ethically, the Pandora's box of monetising human privacy had been flung open. The digital social contract, under which users could trust creators to build products with them in mind, was in tatters. The user was now the product.

The shift in the balance of moral fealty, from users to advertisers, was profound. Product teams turned vast factories of intellectual horsepower not towards the task of improving users' lives, but instead to converting attention, habits and trust into clicks and ads. By abdicating responsibility for their users, the princelings of social media created a moral vacuum into which slunk the dark patterns of attention-capture, trammel nets and basket sneaking.

Far more sinister, however, was the creeping, passive acceptance of a new ethical norm. As every industry digitised, the absence of accountability for human harm quietly calcified in the digital systems of our society.

*

Last year, the Australian Robodebt Royal Commission added the moral valences of "cruel and unfair" to the "crude and illegal" criticisms of a system that affected half a million people and drove at least three to suicide.

In the UK, the Post Office Horizon scandal continues: the recent exoneration of those prosecuted and imprisoned on the evidence of a faulty accounting system came too late for at least four sub-postmasters who had already taken their own lives.

Truly, those perched atop the AI hype cycle, searching tomorrow's horizon for the potential harms of AI "ethical misalignment", may glance down in dismay. Our systems are already killing us.

*

So where does accountability lie? The minister's policy was reasonable, the project plan detailed, the product design effective, the code validated and the operators well-trained. Like a chain of well-meaning neighbours passing a leaky bucket hand-to-hand from lake to fire, everyone played an earnest part in achieving no good outcome. It seems the infinite complexity of modern system development is so vast a solvent that moral accountability dissolves into it unnoticed.

Government regulation cannot work.

The uniquely grand pace and reach of digital innovation is matched almost tautologically by its invulnerability to regulation. Government will always arrive late and underprepared, imposing mostly obsolete and trivially circumventable controls.

In March 2018, following the Cambridge Analytica scandal, Zuckerberg pledged to do more to protect users' privacy. A month later, his company moved some 1.5 billion non-European user accounts from its Irish entity to its US one, placing them beyond the reach of Europe's new GDPR law.

Industry regulation can't be it, either.

Lawyers, engineers, doctors, scientists, accountants: all have ethics boards to which they can be held professionally accountable. There is no software engineering ethics board, nor can there be one. The ComputerEthics subreddit has fewer subscribers than I have LinkedIn followers.

No, I think Fry has it right. "All people power... has to be brought to bear".

In a digital parallel to the global rise of environmental awareness, our zeitgeist must drag into the Overton window a public outcry against the intended and unintended human harm of bad systems, bad in both senses: evil and poor.

The murmurs have already begun, with engaging advocates such as Tristan Harris (of the Center for Humane Technology), Cory Doctorow and Jaron Lanier. A rumble of project managers, product designers, software developers and operators taking accountability for the outcomes of their systems may follow, until the roar can be heard even in the rarefied air of corporate headquarters. One day, only systems built for human good will be good business.

*

In a modern spin on a classic quote, Fry's "Corporations are more interested in developing the capabilities of AI than its safety" recalls Ian Malcolm's line from Jurassic Park: "Your scientists were so preoccupied with whether or not they could, they didn't stop to think if they should".

If you are interested in digital morality and ethics, I will be publishing more at: http://ifweshould.com
